Web Notebook

This section describes how to use the interactive Apache Zeppelin Web Notebook.

Starting the Web Notebook

The Web Notebook can be started in any of the following ways:

- In demo mode, the Web Notebook is started automatically at localhost:9090. See InsightEdge Script for information about demo mode.
- Start the Web Notebook manually at any time by running the following command from the <XAP HOME>/insightedge/bin directory:

      insightedge run --zeppelin

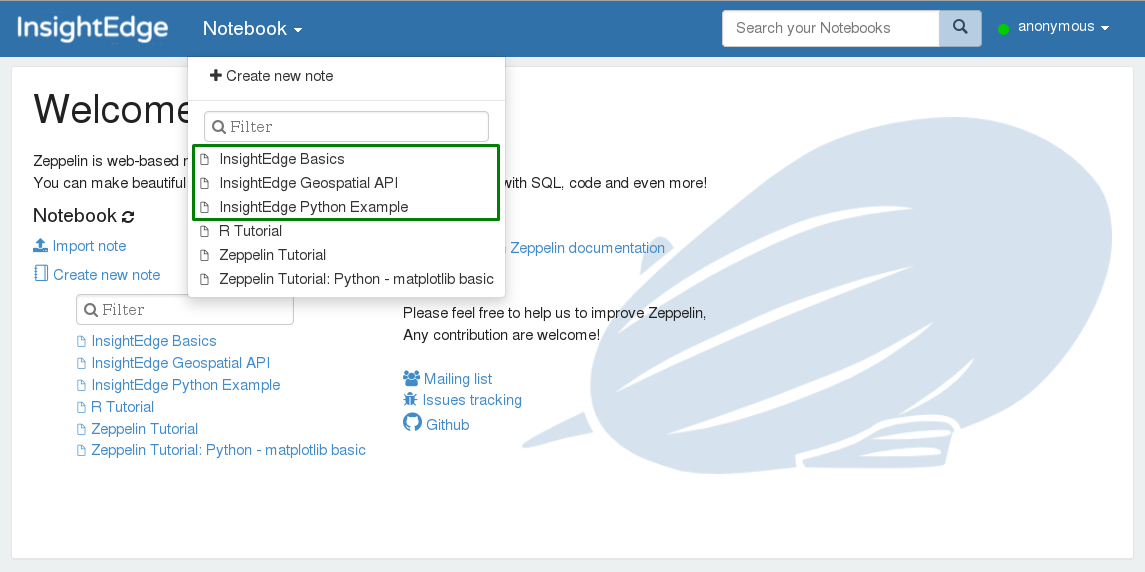

Once Zeppelin is running, you can browse to localhost:9090 and start exploring the pre-built notebooks.

Configuring the Web Notebook

InsightEdge-specific settings can be configured in the Interpreter menu -> Spark interpreter. The most important settings are the following properties, which connect Spark with the Data Grid:

- insightedge.group
- insightedge.locator
- insightedge.spaceName

These properties are transparently translated into InsightEdgeConfig to establish a connection between Spark and the Data Grid.

Refer to Connecting to the Data Grid for more details about the connection properties.
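The translation step described above can be pictured with a small, self-contained sketch. It does not use the InsightEdge API: GridSettings is a hypothetical stand-in for InsightEdgeConfig, and the choice to treat the space name as required and the group and locator as optional is an assumption made for illustration. Only the three property keys come from the interpreter settings listed above.

```scala
// Hypothetical stand-in for illustration only; this is NOT the InsightEdgeConfig API.
final case class GridSettings(
  spaceName: String,
  group: Option[String],
  locator: Option[String]
)

object GridSettings {
  // Property keys as they appear in the Spark interpreter settings.
  val SpaceNameKey = "insightedge.spaceName"
  val GroupKey     = "insightedge.group"
  val LocatorKey   = "insightedge.locator"

  // Look up a key, treating missing or blank values as unset.
  private def nonBlank(props: Map[String, String], key: String): Option[String] =
    props.get(key).map(_.trim).filter(_.nonEmpty)

  // Assumption: the space name is mandatory, the other two keys are optional.
  def fromProperties(props: Map[String, String]): Either[String, GridSettings] =
    nonBlank(props, SpaceNameKey) match {
      case Some(space) =>
        Right(GridSettings(space, nonBlank(props, GroupKey), nonBlank(props, LocatorKey)))
      case None =>
        Left(s"Missing required property: $SpaceNameKey")
    }
}

object GridSettingsDemo {
  def main(args: Array[String]): Unit = {
    // Placeholder values; substitute the ones from your own interpreter settings.
    val props = Map(
      "insightedge.spaceName" -> "my-space",
      "insightedge.group"     -> "my-group",
      "insightedge.locator"   -> ""
    )
    println(GridSettings.fromProperties(props))
  }
}
```

Returning an Either keeps a missing space name visible as a value rather than an exception, which is one reasonable way to surface a misconfigured interpreter early.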

Using the Web Notebook

The Web Notebook comes with example notes. We recommend that you review them, and then use them as templates for your own notes. Keep the following points in mind:

- The Data Grid model can be declared in a notebook using the %define interpreter:

      %define
      package model.v1

      import org.insightedge.scala.annotation._
      import scala.beans.{BeanProperty, BooleanBeanProperty}

      case class Product(
        @BeanProperty @SpaceId var id: Long,
        @BeanProperty var description: String,
        @BeanProperty var quantity: Int,
        @BooleanBeanProperty var featuredProduct: Boolean
      ) {
        def this() = this(-1, null, -1, false)
      }

      %spark
      import model.v1._

- You can load external .jars from the Spark interpreter settings, or with the z.load("/path/to.jar") command:

      %dep
      z.load("./insightedge/examples/jars/insightedge-examples.jar")

  For more details, refer to Zeppelin Dependency Management.

- You must load your dependencies before you start using SparkContext (sc). If you want to redefine the model or load another .jar after SparkContext has already started, you will have to reload the Spark interpreter.
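To see what the annotations and the extra constructor in the Product model contribute, the following standalone sketch lists the JavaBean-style accessors that the Scala compiler generates. It assumes the model is written this way so that Java-based tooling such as the Data Grid can instantiate the class and read or write its fields. The @SpaceId annotation is left out so that the sketch compiles without the InsightEdge .jars, and ProductBeanDemo is a hypothetical helper name.

```scala
import scala.beans.{BeanProperty, BooleanBeanProperty}

// Same shape as the Product model in the list above, minus the InsightEdge
// @SpaceId annotation, so that this sketch has no external dependencies.
case class Product(
  @BeanProperty var id: Long,
  @BeanProperty var description: String,
  @BeanProperty var quantity: Int,
  @BooleanBeanProperty var featuredProduct: Boolean
) {
  // No-argument constructor that fills every field with a placeholder value.
  def this() = this(-1, null, -1, false)
}

object ProductBeanDemo {
  // Names of the JavaBean-style accessors declared on Product, found via
  // Java reflection, which is how Java-based frameworks would discover them.
  def beanAccessors: Seq[String] =
    classOf[Product].getDeclaredMethods.toSeq
      .map(_.getName)
      .filter(n => n.startsWith("get") || n.startsWith("set") || n.startsWith("is"))
      .distinct
      .sorted

  def main(args: Array[String]): Unit = {
    // The no-argument constructor can be called without any field values.
    val blank = new Product()
    println(blank)
    // @BeanProperty yields getX/setX; @BooleanBeanProperty yields isX/setX.
    beanAccessors.foreach(println)
  }
}
```

The fields are declared as var because setters can only be generated for mutable fields.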