Network Considerations

Binding the Process to a Machine IP Address

In many cases, the machines that run InsightEdge, XAP components (a GSA, GSM, or GSC), or XAP client applications (such as web servers or standalone JVM/.NET processes) have multiple network cards with multiple IP addresses. To make sure that the XAP processes or the XAP client application processes bind to the correct IP address (one that is accessible from other machines), use the XAP_NIC_ADDRESS environment variable or the java.rmi.server.hostname system property. Whichever you use, set it to the IP address of the machine (or to one of its addresses if the machine has several). If neither is set, in some cases a client process might not be notified of events generated by the runtime environment or the space.

Examples:

export XAP_NIC_ADDRESS=10.10.10.100

./gs-agent.sh &

java -Djava.rmi.server.hostname=10.10.10.100 MyApplication

Using the above approach, you can leverage multiple network cards within the same machine to provide a higher level of hardware resiliency. You can also make better use of the available network bandwidth by binding different JVM processes running on the same physical machine to different IP addresses. For example, with four GSCs running on the same machine, two of them can use IP_1 and the other two can use IP_2.

For more information, refer to How to Configure an Environment With Multiple Network Cards (Multi-NIC).

Ports

GigaSpaces applications use TCP/IP for most of their remote operations. The following components require open ports:

| Service | Description | Configuration Property | Default Value |
|---|---|---|---|
| RMI registry listening port | Used as an alternative directory service. | com.gigaspaces.system.registryPort system property | 10098 and above |
| Webster listening port | Internal web service used as part of the application deployment process. | com.gigaspaces.start.httpPort system property | 9813 |
| [Web UI Agent](./web-management-console.html) | GigaSpaces XAP Dashboard Web Application. | com.gs.webui.port system property | 8099 |

The following examples set one LRMI listening port range for the GS-UI, and a different range for the GSA/GSC/GSM/Lookup Service:

export EXT_JAVA_OPTIONS=-Dcom.gs.transport_protocol.lrmi.bind-port=7000-7500

export EXT_JAVA_OPTIONS=-Dcom.gs.transport_protocol.lrmi.bind-port=8000-8100

A running GSC tries to use the first free port out of the port range specified. The same port might be used for several connections (via a multiplexed protocol). If the entire port range is exhausted, an error is displayed.

When there are several GSCs or servers running on the same machine, we recommend that you set a different LRMI port range for each JVM. A range of 100 ports per GSC supports a large number of clients (several thousand).

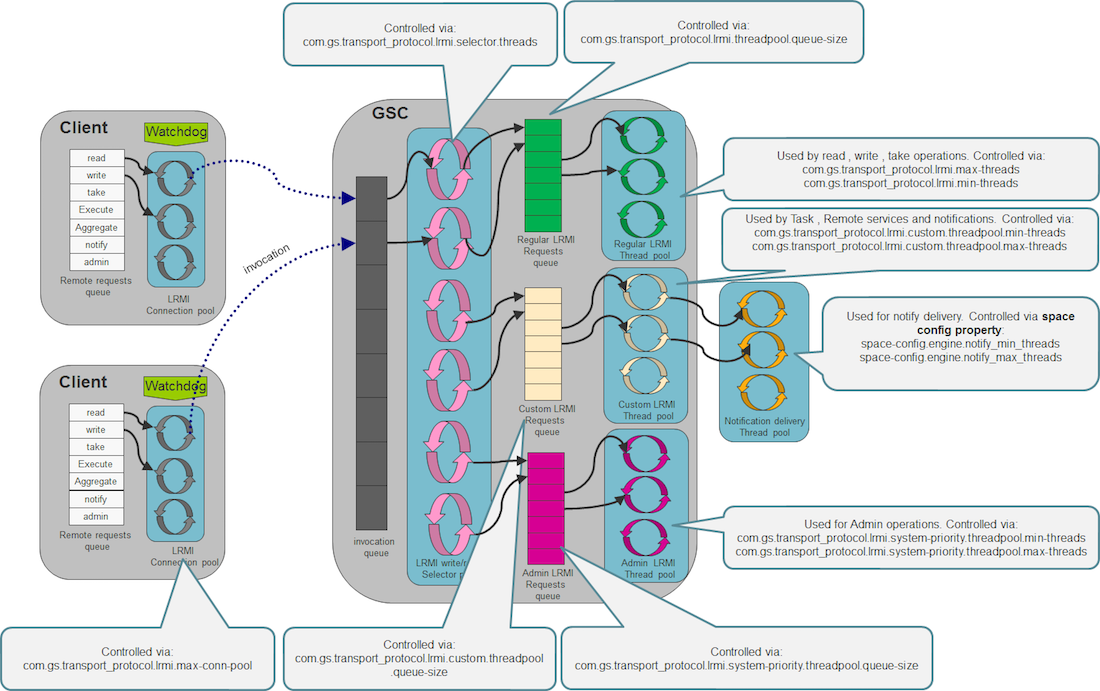
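As a minimal sketch of the recommendation above, the following script computes non-overlapping LRMI port ranges of 100 ports each for several JVMs on one machine. The base port, the number of GSCs, and the lrmi_range helper are assumptions made for illustration; the script only prints the system property each JVM would be given and does not start any process.

```shell
#!/bin/sh
# Hypothetical layout: base port and GSC count are illustrative assumptions.
base_port=7000
range_size=100
gsc_count=4

# Print the port range for the JVM with the given zero-based index.
lrmi_range() {
  start=$((base_port + $1 * range_size))
  end=$((start + range_size - 1))
  echo "${start}-${end}"
}

i=0
while [ "$i" -lt "$gsc_count" ]; do
  echo "GSC $i: -Dcom.gs.transport_protocol.lrmi.bind-port=$(lrmi_range "$i")"
  i=$((i + 1))
done
```

Each printed value can then be passed to the corresponding JVM, for example through EXT_JAVA_OPTIONS as shown earlier.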

LRMI Connection Thread Pool

The default LRMI behavior opens a different connection on the client side, and starts a connection thread on the server side, when a multithreaded client accesses a server component. All client connections may be shared between all the client threads when communicating with the server. All server-side connection threads may be shared between all client connections.

Client LRMI Connection Pool

The client LRMI connection pool is maintained per server component, that is, per space partition. For each space partition, a client maintains a dedicated connection pool shared between all client threads accessing that partition. When multiple partitions (N) are hosted within the same GSC, a client may open a maximum of N * com.gs.transport_protocol.lrmi.max-conn-pool connections against the GSC JVM process.

You may have to change the com.gs.transport_protocol.lrmi.max-conn-pool value to a number smaller than the default (1024), because the default may be too high for applications with multiple partitions.

Client total # of open connections = com.gs.transport_protocol.lrmi.max-conn-pool * # of partitions

This may cause a very large number of connections to be opened on the client side, resulting in a Too many open files error. If this occurs, do one of the following:

- Increase the operating system’s maximum number of file descriptors by calling the following before running the client application (on UNIX):

ulimit -n 65536

- Lower the com.gs.transport_protocol.lrmi.max-conn-pool value.
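The connection estimate above can be worked through with a short script. This is a sketch only: the partition count of 8 is an assumed value, and the comparison uses the file descriptor limit of the current shell as reported by ulimit -n.

```shell
#!/bin/sh
# Illustrative values; substitute the numbers from your own deployment.
max_conn_pool=1024   # com.gs.transport_protocol.lrmi.max-conn-pool default, per the text
partitions=8         # assumed number of space partitions the client accesses

# Worst-case number of connections a single client may open.
total=$((max_conn_pool * partitions))
echo "Worst-case client connections: $total"

# Compare against the current shell's open file descriptor limit.
fd_limit=$(ulimit -n)
if [ "$fd_limit" != "unlimited" ] && [ "$total" -gt "$fd_limit" ]; then
  echo "Exceeds the current file descriptor limit ($fd_limit)"
fi
```

If the estimate exceeds the limit, either option in the list above applies: raise the limit or lower the pool size.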

Server LRMI Connection Thread Pool

The LRMI connection thread pool is a server-side component. This thread pool is in charge of executing the incoming LRMI invocations. It is a single thread pool within the JVM that executes all the invocations, from all the clients and replication targets.

Lookup Locators and Groups

A space (or any other service, such as a GSC or GSM) publishes (or registers/exports) itself within the Lookup Service. The Lookup Service (also called the service proxy) acts as the system directory service. It keeps information about each service, such as its location and its exposed remote methods. Every client or service needs to discover a Lookup Service as part of its bootstrap process.

There are two main options for how to discover a Lookup Service:

- Via locator(s) - Unicast Discovery mode. A specific IP address (or hostname) is used to indicate the machine running the Lookup Service. This option can be used when multicast communication is disabled on the network, or when you want to avoid the overhead involved with multicast discovery.

- Via group(s) - Multicast Discovery mode. Relevant only when the network supports multicast. A group is a “tag” that you assign to the Lookup Service. Clients that want to register with this Lookup Service, or search for a service proxy, need to use this specific group when discovering the Lookup Service.

To configure the XAP runtime components (GSA, GSC, GSM, LUS) to use unicast discovery, set the XAP_LOOKUP_LOCATORS variable:

export XAP_LOOKUP_LOCATORS=MachineA,MachineB

./gs-agent.sh &

To configure the XAP runtime components (GSA, GSC, GSM, LUS) to use multicast discovery, set the XAP_LOOKUP_GROUPS variable:

export XAP_LOOKUP_GROUPS=Group1,Group2

./gs-agent.sh &

When running multiple systems on the same network infrastructure, you should isolate them by having a dedicated set of Lookup Services (and GSCs/GSMs) for each system. Each system should have different locator/group settings.
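One way to keep the settings of several systems apart is to select them by system name before starting the agent. The sketch below assumes two hypothetical systems (orders and billing) with made-up group names and host names, and a hypothetical select_lookup_settings helper; set whichever of the two variables matches the discovery mode you use.

```shell
#!/bin/sh
# Hypothetical system names, group names, and Lookup Service hosts.
select_lookup_settings() {
  case "$1" in
    orders)
      XAP_LOOKUP_GROUPS=orders-group
      XAP_LOOKUP_LOCATORS=ordersLus1,ordersLus2
      ;;
    billing)
      XAP_LOOKUP_GROUPS=billing-group
      XAP_LOOKUP_LOCATORS=billingLus1,billingLus2
      ;;
    *)
      echo "unknown system: $1" >&2
      return 1
      ;;
  esac
  export XAP_LOOKUP_GROUPS XAP_LOOKUP_LOCATORS
}

select_lookup_settings orders
echo "groups=$XAP_LOOKUP_GROUPS locators=$XAP_LOOKUP_LOCATORS"
# The agent would then be started with these settings, as shown earlier:
# ./gs-agent.sh &
```

Because each system resolves to its own groups and locators, components started for one system do not discover the Lookup Services of the other.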