Availability Biased

Overview

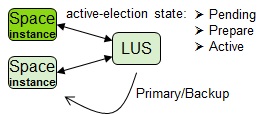

Space instances register themselves with the lookup service. They then go through an active-election process, discovering the current instances and electing a primary. Active election is a three-phase procedure: pending, prepare, active.

Split Brain and Primary Resolution

Split Brain Scenario

Split brain occurs when two or more primary instances are running for the same partition. In most cases the cause is a network disruption that prevents each space instance from communicating with all of the running lookup services. Usually each space instance ends up communicating with a different lookup service rather than with all of them (two in most cases).

During the split, clients may communicate with either primary, treat it as the master copy of the data, and update the data within the space. If the active GSM also loses connectivity with both lookup services, it may provision a new backup.

Once the connection between all instances of a given partition and all lookup services is restored and a split brain is identified (more than a single primary instance for a given partition), the system determines which instance remains the primary and which turns into a backup instance. The data within the instance that becomes a backup is dropped.
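The detection condition described above (more than one primary instance for the same partition) can be illustrated with a minimal sketch. The `InstanceView` and `SplitBrainDetector` types are hypothetical and exist only for this illustration; they are not XAP classes, and the real detection happens inside the product.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical snapshot of one space instance (illustration only, not an XAP type). */
final class InstanceView {
    final String name;
    final int partitionId;
    final boolean primary;

    InstanceView(String name, int partitionId, boolean primary) {
        this.name = name;
        this.partitionId = partitionId;
        this.primary = primary;
    }
}

final class SplitBrainDetector {
    /** Returns partition id -> primary names, only for partitions that have more than one primary. */
    static Map<Integer, List<String>> findSplitPartitions(List<InstanceView> instances) {
        Map<Integer, List<String>> primaries = new TreeMap<>();
        for (InstanceView instance : instances) {
            if (instance.primary) {
                primaries.computeIfAbsent(instance.partitionId, k -> new ArrayList<>())
                         .add(instance.name);
            }
        }
        // A healthy partition has exactly one primary; keep only the split ones.
        primaries.values().removeIf(names -> names.size() < 2);
        return primaries;
    }

    public static void main(String[] args) {
        List<InstanceView> view = Arrays.asList(
                new InstanceView("space_container1:space", 1, true),
                new InstanceView("space_container1_1:space", 1, true),   // second primary
                new InstanceView("space_container2:space", 2, true),
                new InstanceView("space_container2_1:space", 2, false));
        System.out.println(findSplitPartitions(view));
    }
}
```

In this made-up view, only partition 1 is reported, because partition 2 has a single primary and a backup.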

Primary resolution may involve several steps. The first step tries to determine the most recent primary and its data consistency level. If the first step cannot determine which instance is the primary (a tie), the second step is executed (tie break). If the second step cannot determine the primary either, the third step is executed (second tie break). Once a primary is elected, the other instance moves into backup mode and recovers its entire data from the elected primary.

Data resolution also occurs between two different clusters that use the WAN gateway as a replication channel. In that case, conflict resolution is executed.

Primary Resolution

XAP provides built-in recovery policies that are applied after a split-brain has been detected. The default policy, “discard-least-consistent”, determines which of the two primaries should remain and which should be discarded. The alternative policy, “suspend-partition-primaries”, suspends all interaction with the primaries until the split-brain is resolved manually.

The recovery policy can be configured using the Space os-core:properties element. The value is one of the policy names. If none is specified, the default policy “discard-least-consistent” is applied.

<os-core:space id="space" url="/./space">
    <os-core:properties>
        <prop key="space-config.split-brain.recovery-policy.after-detection">
            suspend-partition-primaries
        </prop>
    </os-core:properties>
</os-core:space>

Discard Least Consistent Policy

The primary that is found to be “least consistent” is discarded, and XAP instantiates it as a backup Space. The steps for reaching this conclusion are outlined below.

Resolution - Step 1

Each primary is inspected by checking multiple properties, and an inconsistency ranking is calculated from the results. The primary with the lowest ranking is elected as the primary. If both primaries end up with the same inconsistency level, step two is executed.

The inconsistency level is calculated using the mirror’s active primary identity and various replication statistics. Because the mirror does not allow multiple primaries for the same partition, its view of the active primary is a useful input to the inconsistency level calculation.

Resolution - Step 2

Each primary is inspected for the exact time it was elected to be a primary. The election time is stored within the lookup service, and all lookup services are inspected during this process. The instance that was elected first remains the primary. If both primaries were elected at the same time, or if the time cannot be determined due to discrepancies in lookup service registration, step three is executed.

Resolution - Step 3

The system reviews the primary instance names and chooses the one with the lowest lexical value to be the primary.
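The three steps above can be read as a chain of tie-breakers. The following is a minimal sketch of that decision order only. `PrimaryCandidate`, its fields, and `PrimaryResolution.resolve` are hypothetical names introduced for illustration; how XAP actually computes the inconsistency level is not shown here, so it is modeled as a plain integer.

```java
/** Hypothetical summary of one competing primary (illustration only, not an XAP type). */
final class PrimaryCandidate {
    final String instanceName;
    final int inconsistencyLevel;    // step 1 input: lower is better
    final Long electionTimeMillis;   // step 2 input: null when the time cannot be determined

    PrimaryCandidate(String instanceName, int inconsistencyLevel, Long electionTimeMillis) {
        this.instanceName = instanceName;
        this.inconsistencyLevel = inconsistencyLevel;
        this.electionTimeMillis = electionTimeMillis;
    }
}

final class PrimaryResolution {
    /** Returns the candidate that stays primary; the other one becomes a backup. */
    static PrimaryCandidate resolve(PrimaryCandidate a, PrimaryCandidate b) {
        // Step 1: the lowest inconsistency level wins.
        if (a.inconsistencyLevel != b.inconsistencyLevel) {
            return a.inconsistencyLevel < b.inconsistencyLevel ? a : b;
        }
        // Step 2: the earliest election time wins, if both times are known and differ.
        if (a.electionTimeMillis != null && b.electionTimeMillis != null
                && !a.electionTimeMillis.equals(b.electionTimeMillis)) {
            return a.electionTimeMillis < b.electionTimeMillis ? a : b;
        }
        // Step 3: the lowest lexical instance name wins.
        return a.instanceName.compareTo(b.instanceName) <= 0 ? a : b;
    }

    public static void main(String[] args) {
        PrimaryCandidate first = new PrimaryCandidate("space_container1:space", 2, 1000L);
        PrimaryCandidate second = new PrimaryCandidate("space_container1_1:space", 2, null);
        // Same inconsistency level and an unknown election time, so step 3 decides.
        System.out.println(resolve(first, second).instanceName);
    }
}
```

Each step is consulted only when the previous one ends in a tie, which is why the name comparison in step 3 always produces a result.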

Suspend Partition Primaries Policy

Both primary Space instances are suspended (see Quiesce for reference), awaiting manual resolution.

Resolution - Step 1

Upon discovery of a split-brain, each primary enters a Self-Quiesce mode, suspending all interaction with the Space. Only interactions from a proxy that has been given the Quiesce token are allowed. The Quiesce token is the name of the Space (as opposed to the default auto-generated token), which simplifies coding logic via the Admin API upon split-brain detection.

The logs will show a message similar to:

... "Space instance [gigaSpace_container1:gigaSpace] is in Quiesce state until split-brain is resolved - Quiesce token [gigaSpace]"

Resolution - Step 2

Both primaries are now suspended, and manual intervention is needed, for example to choose the correct primary or to extract data from the cluster. These steps can be done via the CLI, the UI, or the Admin API. With the Admin API you can be alerted when a split-brain is detected.

// Configure the alert
AlertManager alertManager = admin.getAlertManager();
alertManager.configure(new SpacePartitionSplitBrainAlertConfigurer().enable(true).create());

// Be notified
alertManager.getAlertTriggered().add(new AlertTriggeredEventListener() {
    public void alertTriggered(Alert alert) {
        if (alert instanceof SpacePartitionSplitBrainAlert) {
            ... // do something
            Space space = ... // obtain reference
            GigaSpace gigaSpace = space.getGigaSpace();
            // Use the Space name as the Quiesce token so this proxy is still allowed to interact
            gigaSpace.setQuiesceToken(new DefaultQuiesceToken(space.getName()));
            ... // do something with the gigaSpace
        }
    }
});

Resolution - Step 3

The last part of the manual resolution is to remove the unwanted primary instance. This is best achieved by restarting the instance, either from the UI or through the Admin API.

// Obtain the processing unit instance reference
ProcessingUnitInstance instance = ...
instance.restartAndWait();

After that, the remaining primary needs to be un-quiesced to allow incoming interactions. This can be done via a CLI command or through the Admin API on the Processing Unit.

// Obtain the Processing Unit reference
ProcessingUnit pu = ...
pu.unquiesce(new QuiesceRequest("split-brain resolved"));

Common Causes of Split-Brain

Below are the most common causes for Split-Brain scenarios and ways to detect them.

JVM Garbage Collection spikes - a full GC “stops the world” for both the GigaSpaces and application components, holding up internal clocks and timers as well as external interactions.

- Using JMX or other monitoring tools, you can monitor the JVM Garbage Collection activity. You should be alerted whenever a full GC takes longer than 30 seconds. You can also use the Admin API to fetch the full GC events. If a GC takes more than 10 seconds, it is logged as a warning in the GSM/GSC/GSA log file.
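As a rough illustration of the JMX side, the sketch below reads the standard `GarbageCollectorMXBean` counters of the local JVM and classifies a single pause against the two thresholds mentioned above (10 seconds for a warning, 30 seconds for an alert). The `GcPauseCheck` class and its `classify` method are hypothetical helpers, not part of XAP. Note that the MXBean exposes cumulative counts and times only, so the duration of an individual pause would have to come from another source such as GC logs; that part is left as an assumption.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

final class GcPauseCheck {
    static final long WARN_MILLIS = 10_000;   // longer than this is logged as a warning
    static final long ALERT_MILLIS = 30_000;  // longer than this should raise an alert

    /** Classifies the duration of one GC pause (obtaining that duration is out of scope here). */
    static String classify(long pauseMillis) {
        if (pauseMillis > ALERT_MILLIS) {
            return "ALERT";
        }
        if (pauseMillis > WARN_MILLIS) {
            return "WARN";
        }
        return "OK";
    }

    public static void main(String[] args) {
        // Cumulative statistics per collector of the local JVM, via the standard JMX beans.
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            System.out.printf("%s: collections=%d, total time=%d ms%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime());
        }
        System.out.println("12 s pause -> " + classify(12_000));
    }
}
```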

High (>90%) CPU utilization - As discussed, this can cause various components (including ones external to GigaSpaces) to compete for CPU time. Examples are keep-alive mechanisms (which can miss events and therefore trigger initialization of redundant services or false alarms), I/O and network operations that run out of available sockets, and an OS that fails to release resources. Avoid scenarios of sustained (more than a minute) CPU utilization of over 90%.

- You can use the out-of-the-box CPU monitoring component (which uses SIGAR) for measuring the OS and JVM resources. It is easily accessible through the GigaSpaces Admin API.
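The "more than a minute above 90%" rule can be expressed as a small sustained-threshold check. This is only a sketch: `SustainedCpuDetector` is a hypothetical helper, and the samples are assumed to come from whichever monitoring source you use (for example, values read through the Admin API), which is not shown.

```java
/** Hypothetical helper: flags CPU utilization that stays above a threshold for too long. */
final class SustainedCpuDetector {
    private final double threshold;    // e.g. 0.90 for 90%
    private final long windowMillis;   // e.g. 60_000 for one minute
    private boolean high = false;      // true while samples are above the threshold
    private long highSinceMillis = 0;  // timestamp of the first sample of the current high run

    SustainedCpuDetector(double threshold, long windowMillis) {
        this.threshold = threshold;
        this.windowMillis = windowMillis;
    }

    /** Feeds one sample; returns true once utilization has stayed high for longer than the window. */
    boolean onSample(long timestampMillis, double utilization) {
        if (utilization <= threshold) {
            high = false;              // any sample at or below the threshold resets the run
            return false;
        }
        if (!high) {
            high = true;
            highSinceMillis = timestampMillis;
        }
        return timestampMillis - highSinceMillis > windowMillis;
    }

    public static void main(String[] args) {
        SustainedCpuDetector detector = new SustainedCpuDetector(0.90, 60_000);
        long[] times = {0, 30_000, 61_000};
        double[] cpu = {0.95, 0.97, 0.92};   // synthetic samples, for illustration only
        for (int i = 0; i < times.length; i++) {
            System.out.println(times[i] + " ms: sustained=" + detector.onSample(times[i], cpu[i]));
        }
    }
}
```

Resetting on the first sample at or below the threshold keeps short spikes from raising an alert, which matches the intent of flagging only constant high utilization.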

Network outages/disconnections - As discussed, disconnections between the GSMs, or between the GSMs and the GSCs, can cause any of the GSMs to get into what are called “islands”.

- Use a network monitoring tool to monitor network outages, disconnections, and reconnections on the machines that run the GSMs and GSCs. Such a tool should report and alert on the exact date and time of each event.

Islands

In the event of network outages or other failures, the system might behave unexpectedly. These scenarios, called islands, are extreme and need special handling. Here are two island scenarios you might encounter:

- A server running one of the GSMs that manages some of the PUs is disconnected from the network and later reconnects. What happens depends on which of the two GSMs is the primary at the point of disconnection. If the primary GSM is on the island with the GSCs, its backup GSM becomes a primary until the network disconnection is resolved. When the network is repaired, that GSM realizes that the ‘former’ primary is still managing the services and returns to its backup state. If it is the other way around, and the primary GSM loses connection with its PUs due to a network disconnection or any other failure, it continues to behave as primary and tries to redeploy the failed PUs as soon as a GSC is available. This leads to an inconsistent mapping of services and an inconsistent system.

- A more complex form of “islands” occurs when GSCs are available on both islands, leading both GSMs to behave as primaries and deploy the failed PUs. Reconciling at this point needs to take data integrity into account.

The solution for these scenarios is to reconcile the cluster manually. Terminate one of the GSMs so that only one managing GSM remains, restart the GSCs hosting the backup space instances, and, as a last step, start the second GSM (which will become the backup GSM).