GigaSpaces Considerations

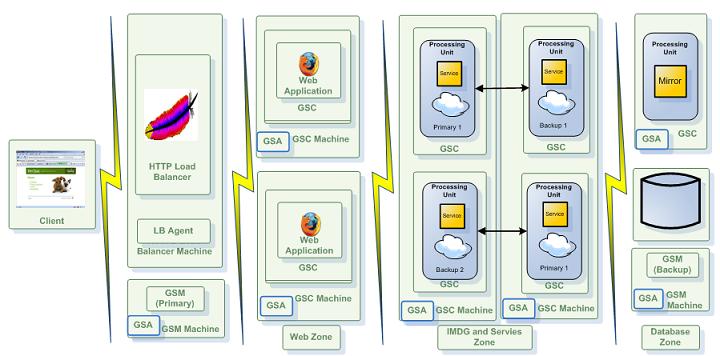

The Runtime Environment (GSA, LUS, GSM and GSC)

In a dynamic environment where you want to start GSCs and GSMs remotely, manually, or dynamically, the GSA is the only component that should be running on the machine hosting the GigaSpaces runtime environment. This lightweight service acts as an agent and starts a GSC/GSM/LUS when needed.

- Grid Service Container (GSC): provides an isolated runtime for one or more processing unit (PU) instances and exposes its state to the GSM.
- Grid Service Manager (GSM): a service grid component that manages a set of GSCs. A GSM has an API for deploying/undeploying Processing Units. When a GSM is instructed to deploy a Processing Unit, it finds an appropriate, available GSC and tells that GSC to run an instance of that Processing Unit. It then continuously monitors that Processing Unit instance to verify that it is alive and that the SLA is not breached.
- Lookup Service (LUS): provides a mechanism for services to discover each other. Each service can query the Lookup Service for other services, and register itself in the Lookup Service so other services can find it.

Plan the initial number of GSCs and GSMs based on the application memory footprint and the amount of processing you might need. The most basic deployment should include 2 GSMs (running on different machines), 2 Lookup Services (running on different machines), and 2 GSCs on each machine. The GSCs host your data grid or any other application components (services, web servers, Mirror) that you deploy.

In general, the total number of GSCs that should be running across the machines hosting the system depends on:

- The amount of data you want to store in memory.
- The JVM maximum heap size.
- The processing requirements.
- The number of users the system needs to serve.
- The total number of CPU cores on each machine.

The recommended number of GSCs per machine is half the total number of CPU cores, with each GSC having a maximum heap size of no more than 10GB.
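The sizing rule above can be sketched as simple arithmetic. This is only an illustration: the function names are hypothetical, and rounding down with a floor of one GSC is an assumption, not something the guidance specifies.

```shell
#!/bin/sh
# Recommended GSCs per machine: half the CPU cores (rule of thumb from the text).
# Assumption: round down, but never go below one GSC.
recommended_gscs() {
  cores=$1
  gscs=$((cores / 2))
  if [ "$gscs" -lt 1 ]; then
    gscs=1
  fi
  echo "$gscs"
}

# Upper bound on the combined GSC heap for one machine, in GB,
# using the 10GB per-GSC cap from the text.
max_total_heap_gb() {
  echo $(( $(recommended_gscs "$1") * 10 ))
}

recommended_gscs 16      # prints 8
max_total_heap_gb 16     # prints 80
```

The heap figure is only a ceiling; the machine still needs enough physical memory for the operating system and the other grid components.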

Configuring the Runtime Environment

JVM parameters (system properties, heap settings, etc.) that are shared between all components are best set using the GS_OPTIONS_EXT environment variable.

The GSA (Grid Service Agent) is a process manager that can spawn and manage Service Grid processes (operating system level processes) such as the Grid Service Manager, the Grid Service Container, and the Lookup Service. Typically, the GSA is started when the hosting machine starts up. Using the agent, you can bootstrap the entire cluster, and start and stop additional GSCs, GSMs, and Lookup Services at will.

GSA-specific JVM parameters can be passed using GS_GSA_OPTIONS. You can set the component-specific variables (GS_GSA_OPTIONS, GS_GSM_OPTIONS, GS_GSC_OPTIONS, GS_LUS_OPTIONS) in the GSA script or in a wrapper script, and the values will be passed to the corresponding components.

#Wrapper Script

export GS_GSA_OPTIONS='-Xmx256m'

export GS_GSC_OPTIONS='-Xmx2048m'

export GS_GSM_OPTIONS='-Xmx1024m'

export GS_LUS_OPTIONS='-Xmx1024m'

#call gs-agent.sh

. ./gs-agent.sh

@rem Wrapper Script

@set GS_GSA_OPTIONS=-Xmx256m

@set GS_GSC_OPTIONS=-Xmx2048m

@set GS_GSM_OPTIONS=-Xmx1024m

@set GS_LUS_OPTIONS=-Xmx1024m

@rem call gs-agent.bat

call gs-agent.bat

The above LUS configuration will serve up to 50 partitions running on 100 GSCs. For larger environments, you must increase the heap size and perform GC tuning.

Running Multiple Groups

You may have a set of LUS/GSM managing GSCs associated with a specific group. To split your network into 2 groups, start the GigaSpaces runtime environment as follows:

export GS_LOOKUP_GROUPS=GroupX

gs-agent.sh --lus=1 --gsm=1

export GS_LOOKUP_GROUPS=GroupX

gs-agent.sh --gsc=4

export GS_LOOKUP_GROUPS=GroupY

gs-agent.sh --lus=1 --gsm=1

export GS_LOOKUP_GROUPS=GroupY

gs-agent.sh --gsc=2

export GS_LOOKUP_GROUPS=GroupX

gs deploy-space -cluster schema=partitioned total_members=4 spaceX

export GS_LOOKUP_GROUPS=GroupY

gs deploy-space -cluster schema=partitioned total_members=2 spaceY

Running Multiple Locators

You may have a set of LUS/GSM managing GSCs associated with a specific locator. To split your network into 2 groups using different lookup locators, start the GigaSpaces runtime environment as follows:

export GS_LUS_OPTIONS=-Dcom.sun.jini.reggie.initialUnicastDiscoveryPort=8888

export GS_LOOKUP_LOCATORS=127.0.0.1:8888

export GS_OPTIONS_EXT=-Dcom.gs.multicast.enabled=false

gs-agent.sh --lus=1 --gsm=1

export GS_LOOKUP_LOCATORS=127.0.0.1:8888

export GS_OPTIONS_EXT=-Dcom.gs.multicast.enabled=false

gs-agent.sh --gsc=4

export GS_LUS_OPTIONS=-Dcom.sun.jini.reggie.initialUnicastDiscoveryPort=9999

export GS_LOOKUP_LOCATORS=127.0.0.1:9999

export GS_OPTIONS_EXT=-Dcom.gs.multicast.enabled=false

gs-agent.sh --lus=1 --gsm=1

export GS_LOOKUP_LOCATORS=127.0.0.1:9999

export GS_OPTIONS_EXT=-Dcom.gs.multicast.enabled=false

gs-agent.sh --gsc=2

export GS_LOOKUP_LOCATORS=127.0.0.1:8888

gs deploy-space -cluster schema=partitioned total_members=4 spaceX

export GS_LOOKUP_LOCATORS=127.0.0.1:9999

gs deploy-space -cluster schema=partitioned total_members=2 spaceY

In addition to the Lookup Service, there is an alternative way to export the Space proxy: via the RMI registry (JNDI). The registry is started by default within any JVM running a GSC/GSM. By default, the port used is 10098 and above. Use this option only in special cases where there is no way to use the default Lookup Service. Because this is the standard RMI registry, it suffers from known limitations, such as being non-distributed and not highly available.

The Lookup Service runs by default as a standalone JVM process started by the GSA. You can also embed it to run together with the GSM. In general, you should run two Lookup Services per system. Running more than two Lookup Services may increase overhead, because every service exchanges chatter and heartbeats with each Lookup Service to signal that it exists.

Zones

Zones allow you to label a GSC before starting it. Use zones to isolate applications and a data grid running on the same network. Zones are designed to let users deploy a processing unit (PU) to a specific set of GSCs, where all of them share the same set of LUSs and GSMs. The PU is the unit of packaging and deployment in the GigaSpaces Data Grid; when a PU is deployed, a PU instance is the actual runtime entity.

The zone property can be used, for example, to deploy your data grid into specific GSCs labeled with specific zones. The zone is specified before the GSC starts, and cannot be changed after the GSC has been started.

Verify that you have an adequate number of GSCs running before deploying an application whose SLA specifies a specific zone.

To use zones when deploying your PU, start the GSCs with the zone set, and then deploy the PU into that zone:

export GS_OPTIONS_EXT="-Dcom.gs.zones=webZone ${GS_OPTIONS_EXT}"

gs-agent.sh --gsc=2

gs deploy -zones webZone myWar.war

Running Multiple Zones

You may have a set of LUS/GSM managing multiple zones (recommended) or have a separate LUS/GSM set per zone. If you have a set of LUS/GSM managing multiple zones, you should run them as follows:

gs-agent.sh --lus=1 --gsm=1

export GS_OPTIONS_EXT="-Dcom.gs.zones=zoneX ${GS_OPTIONS_EXT}"

gs-agent.sh --gsc=4

export GS_OPTIONS_EXT="-Dcom.gs.zones=zoneY ${GS_OPTIONS_EXT}"

gs-agent.sh --gsc=2

Runtime File Location

GigaSpaces generates some files while the system is running. You can change the location of the generated files using the following system properties:

| System Property | Description | Default |
|---|---|---|
| com.gigaspaces.logger.RollingFileHandler.filename-pattern | The location of log files and their file pattern. | <GS_HOME>\logs |
| com.gs.deploy | The location of the deploy directory of the GSM. | <GS_HOME>\deploy |
| com.gs.work | The location of the work directory of the GSM and GSC. Because this directory is critical to the proper functioning of the system, set it to local storage to avoid failures caused by network outages when remote storage is used. | <GS_HOME>\work |
| user.home | The location of the system defaults configuration. Used by the GigaSpaces Management Center and runtime system components. | |
| com.gigaspaces.lib.platform.ext | The PUs' shared classloader libraries folder. PU JARs located within this folder are loaded once into the JVM system classloader and shared between all the PU instance classloaders within the GSC. In most cases this is a better option than com.gs.pu-common for JDBC. | <GS_HOME>\lib\platform\ext |
| com.gs.pu-common | The location of common classes used across multiple processing units. The libraries located within this folder are loaded into each PU instance classloader (and not into the system classloader, as with com.gigaspaces.lib.platform.ext). | <GS_HOME>\lib\optional\pu-common |
| com.gigaspaces.grid.gsa.config-directory | The location of the GSA configuration files. The GigaSpaces Agent (GSA) manages different process types. Each process type is defined within this folder in an XML file that identifies the process type by its name. | <GS_HOME>\config\gsa |
| java.util.logging.config.file | The path to the Java logging configuration file. Use it to enable finest-level logging when troubleshooting various GigaSpaces services. You can control this setting via the GS_LOGS_CONFIG_FILE environment variable. | <GS_HOME>\config\log\xap_logging.properties |

You can use com.gigaspaces.lib.platform.ext and com.gs.pu-common to decrease the deployment time if your processing unit contains many third-party JAR files. By default, each GSC downloads the processing unit JAR file (along with all the JARs it depends on) from the GSM to its local working directory. In large deployments spanning tens or hundreds of GSCs, this can be very time consuming. In these cases, consider placing the JARs on which your processing unit depends in a shared location on your network, and then point the com.gs.pu-common or com.gigaspaces.lib.platform.ext directory to this location.
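As a minimal sketch of pointing com.gs.pu-common at a shared location, the property can be added to GS_OPTIONS_EXT before the agent is started. The helper name prepend_system_property and the /mnt/shared/pu-common path are hypothetical and only serve the illustration.

```shell
#!/bin/sh
# Prepend a -D system property to an existing JVM options string.
# Arguments: property name, property value, existing options (may be empty).
prepend_system_property() {
  if [ -n "$3" ]; then
    printf '%s\n' "-D$1=$2 $3"
  else
    printf '%s\n' "-D$1=$2"
  fi
}

# Hypothetical shared mount; replace with a location that exists on your network.
SHARED_LIBS=/mnt/shared/pu-common

GS_OPTIONS_EXT=$(prepend_system_property com.gs.pu-common "$SHARED_LIBS" "${GS_OPTIONS_EXT:-}")
export GS_OPTIONS_EXT
echo "$GS_OPTIONS_EXT"
# Start the agent afterwards, for example: gs-agent.sh --gsc=2
```

Building the string in a helper keeps any options that were already set in GS_OPTIONS_EXT, rather than overwriting them.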

PU Packaging and CLASSPATH

User PU Application Libraries

A Processing Unit JAR file, or a Web Application WAR file should include (within its lib folder) all the necessary JARs required for the application. Resource files should be placed within one of the JAR files within the PU JAR, located under the lib folder. In addition, the PU JAR should include the pu.xml within the META-INF\spring folder.

To close LRMI threads when shutting down the application, call LRMIManager.shutdown().

Data Grid PU Libraries

When deploying a data grid PU, it is recommended to include all Space classes and their dependency classes as part of a PU JAR file. This PU JAR file should include a pu.xml within the META-INF\spring folder that contains the Space declarations and relevant tuning parameters.

GigaSpaces Management Center Libraries

The GigaSpaces Management Center has been deprecated and will be removed in a future release.

It is recommended to include all Space classes and their dependency classes in the GS-UI CLASSPATH. This ensures that you can query the data via the GigaSpaces Management Center. To set the GigaSpaces Management Center classpath, set the POST_CLASSPATH variable to the locations of the application JARs before calling the GS-UI script.

To avoid having to load the same library into each PU instance classloader running within the GSC, you should place common libraries (such as JDBC driver, logging libraries, Hibernate libraries and their dependencies) in the $GS_HOME\lib\optional\pu-common folder. You can specify the location of this folder using the com.gs.pu-common system property.

Space Memory Management

The Space supports two memory management modes:

- ALL_IN_CACHE: assumes all application data is stored within the Space.
- LRU (Least Recently Used): assumes some of the application data is stored within the Space, and the rest is stored in an external data source. LRU is a common caching strategy that evicts the least recently used items first to make room for new elements when the cache is full.

The eviction policy mechanism is deprecated and will be removed in a future release. If the available physical memory is limited, consider using the MemoryXtend module, which supports using external storage for Space data.

When running with ALL_IN_CACHE, the memory management does the following:

- Stops clients from writing data into the Space when the JVM utilized memory crosses the write threshold (a percentage of the maximum heap size).
- Throws a MemoryShortageException back to the client when the JVM utilized memory crosses the high_watermark_percentage threshold.

When running with ALL_IN_CACHE, ensure that the default memory management parameters are tuned according to the JVM heap size. A large heap size (over 2GB) requires special attention. Here is an example of memory manager settings for a 10GB heap size:

<os-core:embedded-space id="space" space-name="mySpace" >

<os-core:properties>

<props>

<prop key="space-config.engine.memory_usage.high_watermark_percentage">95</prop>

<prop key="space-config.engine.memory_usage.write_only_block_percentage">94</prop>

<prop key="space-config.engine.memory_usage.write_only_check_percentage">93</prop>

<prop key="space-config.engine.memory_usage.low_watermark_percentage">92</prop>

</props>

</os-core:properties>

</os-core:embedded-space>
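To see what these percentages mean in absolute terms, the following sketch converts each threshold to megabytes for a 10GB (10240MB) heap. The helper names are hypothetical, and the strictly descending order check is an assumption inferred from the example values (95 > 94 > 93 > 92), not a rule stated here.

```shell
#!/bin/sh
# Convert a heap percentage threshold to megabytes (integer, rounded down).
threshold_mb() {
  heap_mb=$1
  percent=$2
  echo $((heap_mb * percent / 100))
}

# Assumption: the four thresholds should be strictly descending, as in the example:
# high_watermark > write_only_block > write_only_check > low_watermark.
thresholds_descending() {
  [ "$1" -gt "$2" ] && [ "$2" -gt "$3" ] && [ "$3" -gt "$4" ]
}

HEAP_MB=10240   # 10GB heap, as in the example above
for pct in 95 94 93 92; do
  echo "${pct}% of ${HEAP_MB}MB = $(threshold_mb "$HEAP_MB" "$pct")MB"
done

if thresholds_descending 95 94 93 92; then
  echo "thresholds are ordered"
fi
```

With a 10GB heap, each percentage point is roughly 100MB, so the gap between the low and high watermarks in this example is only about 300MB.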

Distributing the Primary Spaces

By default, when running GSCs on multiple machines and deploying a Space with backups, GigaSpaces tries to provision primary Spaces to all available GSCs across all the machines.

The max-instances-per-vm and the max-instances-per-machine deploy parameters should be set when deploying your data grid, to determine how the deployed Processing Unit (e.g. Space) is provisioned into the different running GSCs.

The number of backups per partition is zero or one.

Without setting the max-instances-per-vm and the max-instances-per-machine, GigaSpaces might provision a primary and a backup instance of the same partition into GSCs running on the same physical machine. To avoid this behavior, set max-instances-per-vm=1 and max-instances-per-machine=1. This ensures that the primary and backup instances of the same partition are provisioned into different GSCs running on different machines. If there is one machine running GSCs and max-instances-per-machine=1, backup instances are not provisioned.

Here is an example of how to deploy a data grid with 4 partitions, with a backup per partition (total of 8 Spaces), with 2 Spaces per GSC, and the primary and backup running on different machines (even when you have other GSCs running):

gs deploy-space -cluster schema=partitioned-sync2backup total_members=4,1 -max-instances-per-vm 2 -max-instances-per-machine 1 MySpace
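The instance count in the example above follows from the total_members value: each partition has one primary plus its backups. The sketch below reproduces that arithmetic and assembles the same command line from its parts. The function names are hypothetical, and the script only prints the command rather than running it.

```shell
#!/bin/sh
# Total Space instances = partitions * (1 primary + backups per partition).
total_instances() {
  echo $(( $1 * (1 + $2) ))
}

# Assemble the deploy command shown above from its parameters.
# Arguments: partitions, backups, max-instances-per-vm,
#            max-instances-per-machine, space name.
deploy_command() {
  printf 'gs deploy-space -cluster schema=partitioned-sync2backup total_members=%s,%s -max-instances-per-vm %s -max-instances-per-machine %s %s\n' \
    "$1" "$2" "$3" "$4" "$5"
}

echo "instances: $(total_instances 4 1)"   # instances: 8
deploy_command 4 1 2 1 MySpace
```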

Log Files

GigaSpaces generates log files for each running component. This includes the GSA, GSC, GSM, Lookup Service, and client-side components. By default, the log files are created within the <GS_HOME>\logs folder. After some time, you may end up with a large number of files that are difficult to maintain and search. It is recommended to back up or delete old log files. You can use the logging backup policy to manage your log files.
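Independently of the logging backup policy, old files can be found with standard tools. The following is a minimal sketch. It assumes that the log files end in .log and that GS_HOME points at the installation; the function name and the 30-day cutoff are illustrative choices.

```shell
#!/bin/sh
# List log files under a directory that were last modified more than N days ago.
# Arguments: log directory, age in days.
list_old_logs() {
  find "$1" -type f -name '*.log' -mtime +"$2"
}

# Assumption: GS_HOME points at the installation and logs use the default folder.
LOG_DIR="${GS_HOME:-.}/logs"
if [ -d "$LOG_DIR" ]; then
  # Review the list first; archive or delete the files only once it looks right.
  list_old_logs "$LOG_DIR" 30
fi
```

Listing before deleting makes it easy to confirm that the pattern matches only the files you intend to remove.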
