GigaSpaces Manager

The GigaSpaces Manager (or simply the Manager) is a component that bundles the LUS and GSM together with Apache ZooKeeper and an embedded web application, which hosts an Admin instance with a RESTful management API on top of it.

In addition to simplifying setup and management, the Manager also provides the following benefits:

- Space leader election uses Apache ZooKeeper instead of the LUS, providing a more robust process (consistent when network partitions occur), and eliminating split brain.

- When using MemoryXtend, the last primary will automatically be stored in Apache ZooKeeper (instead of having to set up a shared NFS and configure the Processing Unit to use it).

- The GSM uses Apache ZooKeeper for leader election (instead of the active-active topology used today). This provides a more robust process (consistent when network partitions occur). Also, having a single leader GSM means that the general behaviour is more deterministic and logs are easier to read.

- REST API for managing the environment remotely from any platform.

Getting Started

The easiest way to get started is to run a standalone Manager on your machine - simply run the following command:

Linux:

./gs.sh host run-agent --auto

Windows:

gs.bat host run-agent --auto

In the Manager log file ($GS_HOME/logs), you can see:

- The Manager has started the LUS, ZooKeeper, GSM and REST API (and various other details about them).

- Apache ZooKeeper files reside in $GS_HOME/work/manager/zookeeper.

- The REST API is started on localhost:8090.

The local Manager is intended for local use on the developer's machine, so it binds to localhost and is not accessible from other machines. If you want to start a Manager and access it from other hosts (remote access), follow the procedure described in High Availability below with a single host.

High Availability

In a production environment, you'll probably want a cluster of Managers on multiple hosts to ensure high availability. You'll need 3 machines (an odd number is required to ensure a quorum during network partitions). For example, suppose you’ve selected machines alpha, bravo and charlie to host the managers:

- Edit the $GS_HOME/bin/setenv-overrides.sh/bat script and set GS_MANAGER_SERVERS to the list of hosts. For example: export GS_MANAGER_SERVERS=alpha,bravo,charlie

- Copy the modified setenv-overrides.sh/bat to each machine that runs a GigaSpaces Agent.

- Run ./gs.sh[bat] host run-agent --auto on the manager machines (alpha, bravo, and charlie in this case).

Starting more than one Manager on the same host is not supported.
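The requirement for an odd number of Managers follows from majority arithmetic: a ZooKeeper ensemble stays available only while a strict majority of its servers can reach each other. The sketch below works through that arithmetic for a few cluster sizes. The function names are hypothetical and are not part of GigaSpaces or ZooKeeper.

```shell
#!/bin/sh
# Illustrative quorum arithmetic; function names are hypothetical.

# Smallest number of servers that forms a strict majority of $1 servers.
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

# Number of servers that can fail while a majority is still reachable.
tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 2 3 4 5; do
  echo "managers=$n quorum=$(quorum_size "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

Two Managers tolerate no failures, and four tolerate no more than three do, which is why an even-sized cluster adds hosts without adding availability.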

Configuration

Ports

The following ports can be modified using system properties, e.g. via the setenv-overrides script located in $GS_HOME/bin:

| Port | System Property | Default |
|---|---|---|
| REST | com.gs.manager.rest.port | 8090 |
| ZooKeeper discovery | com.gs.manager.zookeeper.discovery.port | 2888 |
| ZooKeeper leader election | com.gs.manager.zookeeper.leader-election.port | 3888 |
| ZooKeeper client | com.gs.zookeeper.client.port | 2181 |
| Lookup Service | com.gs.multicast.discoveryPort | 4174 |

Apache Zookeeper requires that each Manager can reach the other Managers. If you change the Apache Zookeeper ports, make sure you use the same port on all machines. If that is not possible for some reason, you may specify the ports via the GS_MANAGER_SERVERS environment variable. For example:

GS_MANAGER_SERVERS="alpha;zookeeper=2000:3000;lus=4242,bravo;zookeeper=2100:3100,charlie;zookeeper=2200:3200"

When using this syntax in Unix/Linux systems, make sure to wrap it in quotes (as shown), because of the semi-colons.
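To make the structure of the per-host syntax easier to see, here is a minimal sketch that splits such a value into its entries: commas separate hosts, and semicolons separate a host from its overrides. The helper name is hypothetical and exists only for illustration; GigaSpaces parses the variable itself.

```shell
#!/bin/sh
# Illustrative only: prints one line per entry of a GS_MANAGER_SERVERS-style value.
# list_manager_entries is a hypothetical helper, not a GigaSpaces command.
list_manager_entries() {
  old_ifs=$IFS
  IFS=','                        # entries are separated by commas
  for entry in $1; do
    host=${entry%%;*}            # text before the first ';'
    case $entry in
      *\;*) opts=${entry#*;} ;;  # text after the first ';'
      *)    opts="" ;;
    esac
    echo "host=$host options=${opts:-none}"
  done
  IFS=$old_ifs
}

# The value is quoted so the shell does not treat ';' as a command separator.
list_manager_entries "alpha;zookeeper=2000:3000;lus=4242,bravo;zookeeper=2100:3100,charlie;zookeeper=2200:3200"
```

Passing the same string without quotes would make the shell stop reading the value at the first semicolon, which is the reason for the quoting advice above.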

Configuring manager servers includes LUS definitions. When you define GS_MANAGER_SERVERS, do not define GS_LOOKUP_LOCATORS.

The ZooKeeper client port can also be set with the GS_ZOOKEEPER_CLIENT_PORT environment variable, or in the ZooKeeper configuration file located at config/zookeeper/zoo.cfg.

If the port is defined in more than one place, the order of precedence (highest to lowest) is: Java system property, environment variable, configuration file.

When using the Admin API standalone client, you can configure the port using the Java system property or the environment variable.
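The precedence order for the ZooKeeper client port can be sketched as a simple fallback chain. The helper below is hypothetical and only models the rule stated above; it does not read any real GigaSpaces configuration.

```shell
#!/bin/sh
# Illustrative precedence resolution; resolve_port is a hypothetical helper.
# Arguments: system property value, environment variable value, config file
# value. Pass "" for any source that is not set.
resolve_port() {
  if [ -n "$1" ]; then
    echo "$1"        # Java system property has the highest priority
  elif [ -n "$2" ]; then
    echo "$2"        # then the environment variable
  else
    echo "$3"        # finally the value from the configuration file
  fi
}

resolve_port "" "2182" "2181"       # environment variable overrides the file
resolve_port "2183" "2182" "2181"   # system property overrides both
```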

HSQLDB Configuration

GigaSpaces Manager stores statistical data in an HSQLDB database. The data is held for a predefined period of time, in order to show related metrics in the Ops Manager.

It is important to monitor the size of the HSQLDB database ($GS_HOME/work/db/metricsdb.data), and change the data retention policy if necessary.

GigaSpaces provides reasonable default values for the retention policy. Depending on cluster size, and the number of types and indexes, these default values may not remove old data from the database fast enough, causing the database to grow on disk and possibly also causing internal database memory issues.

The HSQLDB port and related connection settings can be set as follows:

-Dcom.gs.ui.metrics.db.port=9101 (default is 9101)

-Dcom.gs.ui.query-timeout=700

-Dcom.gs.ui.metrics.db.host=DBHost (usually no need to change)

-Dcom.gs.ui.metrics.db.name=metricsdb

The retention policy for this data can be configured as follows:

- How long to keep data in the database: -Dcom.gs.ui.metrics.db.retention.retain-duration=PT10M (default is ten minutes)

- How often to run the delete task: -Dcom.gs.ui.metrics.db.retention.delay-duration=PT1M (default is one minute)

- How many rows to delete each time: -Dcom.gs.ui.metrics.db.retention.batch-size=20000 (default is 20,000)
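As a sketch of how a tighter retention policy might be applied, the fragment below appends the three properties to GS_MANAGER_OPTIONS. It assumes the Manager picks these properties up from that variable (for example when set in setenv-overrides.sh). The values are illustrative, not recommendations, and are written in the same ISO-8601 duration notation as the defaults (PT5M is five minutes, PT30S is thirty seconds).

```shell
# Illustrative values only; assumes the Manager reads these system properties
# from GS_MANAGER_OPTIONS. Existing options, if any, are preserved.
GS_MANAGER_OPTIONS="${GS_MANAGER_OPTIONS:-} \
-Dcom.gs.ui.metrics.db.retention.retain-duration=PT5M \
-Dcom.gs.ui.metrics.db.retention.delay-duration=PT30S \
-Dcom.gs.ui.metrics.db.retention.batch-size=50000"
export GS_MANAGER_OPTIONS

# Show the resulting option string for verification.
echo "$GS_MANAGER_OPTIONS"
```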

Disabling HSQLDB Usage

If desired, HSQLDB usage can be disabled as follows:

-Dcom.gs.hsqldb.all-metrics-recording.enabled=false

-Dcom.gs.ops-ui.enabled=false

Apache Zookeeper

Apache ZooKeeper's behavior is governed by its configuration file (zoo.cfg).

When using GigaSpaces Manager, an embedded Zookeeper instance is started using a default configuration located at $GS_HOME/config/zookeeper/zoo.cfg.

If you need to override the default settings, either edit the default file, or use the GS_ZOOKEEPER_SERVER_CONFIG_FILE environment variable or the com.gs.zookeeper.config-file system property to point to your custom configuration file.

The default Zookeeper port is 2181.

For more information about Apache Zookeeper configuration, see ZooKeeper configuration.

Zookeeper Configuration File

The ZooKeeper configuration file zoo.cfg is preset with the following parameters.

| Property | Description | Value |
|---|---|---|
| tickTime | Time unit used by ZooKeeper, in milliseconds. | 1000 |
| initLimit | Amount of time, in ticks, to allow followers to connect and sync to a leader. | 10 |
| syncLimit | Amount of time, in ticks, to allow followers to sync with ZooKeeper. | 10 |
| clientPort | The port to listen for client connections; the port that clients attempt to connect to. | 2181 |
| maxSessionTimeout | The maximum session timeout that the server will allow the client to negotiate, in milliseconds. | 60000 |
| autopurge | Automatic purging of the snapshots and corresponding transaction logs. | enabled by purgeInterval > 0 |
| autopurge.purgeInterval | The time interval for which the purge task has to be triggered (zero to disable), in hours. | 1 |
| autopurge.snapRetainCount | Retains the most recent snapshots and the corresponding transaction logs and deletes the rest. | 3 |
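Because initLimit and syncLimit are expressed in ticks, their real duration depends on tickTime. The short sketch below converts the preset values to milliseconds; the variable and function names are made up for the example.

```shell
#!/bin/sh
# Converts tick-based ZooKeeper limits to milliseconds using the preset values.
tick_time_ms=1000
init_limit_ticks=10
sync_limit_ticks=10

# ticks_to_ms <ticks> <tick length in ms>
ticks_to_ms() {
  echo $(( $1 * $2 ))
}

echo "initLimit = $(ticks_to_ms "$init_limit_ticks" "$tick_time_ms") ms"
echo "syncLimit = $(ticks_to_ms "$sync_limit_ticks" "$tick_time_ms") ms"
```

With the preset values, both limits come to 10000 ms, so changing tickTime alone also scales both limits.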

ZooKeeper Client

The Manager stack uses the ZooKeeper leader election to select a leader among the Grid Service Managers. The leader GSM will act as the managing (active) GSM of the deployed Processing Units. The ZooKeeper quorum ensures that there will only be one elected Manager. In the absence of a quorum, and until a GSM is elected leader, the GSMs will only monitor the cluster. As a participant of the ZooKeeper leader election, the GSM is configurable using the following properties:

| System Property | Default |
|---|---|
| com.gs.manager.leader-election.zookeeper.connection-timeout | 5000 |
| com.gs.manager.leader-election.zookeeper.session-timeout | 15000 |
| com.gs.manager.leader-election.zookeeper.retry-timeout | Integer.MAX_VALUE |
| com.gs.manager.leader-election.zookeeper.retry-interval | 100 |

Secured ZooKeeper

You can set up secured ZooKeeper as follows:

- Create keystore and truststore:

keytool -genkeypair \
  -alias mykey \
  -keyalg RSA \
  -keysize 2048 \
  -validity 3650 \
  -keystore zk-keystore.p12 \
  -storetype PKCS12 \
  -storepass password \
  -keypass password \
  -dname "CN=localhost"

keytool -exportcert \
  -alias mykey \
  -keystore zk-keystore.p12 \
  -storepass password \
  -rfc \
  -file mycert.crt

keytool -importcert \
  -alias trustedcert \
  -file mycert.crt \
  -keystore zk-truststore.p12 \
  -storetype PKCS12 \
  -storepass password \
  -noprompt

- Set up the ZooKeeper configuration file zookeeper-server.cfg as follows:

clientPort=2181
# ZK server listens for incoming secured TLS connections from clients
secureClientPort=2281
# Decides whether TLS should be ON or OFF for the ZK server
ssl=true
sslQuorum=true
serverCnxnFactory=org.apache.zookeeper.server.NettyServerCnxnFactory
ssl.keyStore.location=../config/zookeeper/zk-keystore.p12
ssl.keyStore.password=password
ssl.keyStore.type=PKCS12
ssl.trustStore.location=../config/zookeeper/zk-truststore.p12
ssl.trustStore.password=password
ssl.quorum.keyStore.location=../config/zookeeper/zk-keystore.p12
ssl.quorum.keyStore.password=password
ssl.quorum.trustStore.location=../config/zookeeper/zk-truststore.p12
ssl.quorum.trustStore.password=password
# Allow quorum TLS connections with mismatched hostnames
ssl.quorum.hostnameVerification=false

- Set up the zoo-client.cfg file:

# Secure client connection port
secureClientPort=2281
# SSL/TLS settings
ssl=true
zookeeper.client.secure=true
zookeeper.sasl.client=false
zookeeper.clientCnxnSocket=org.apache.zookeeper.ClientCnxnSocketNetty
zookeeper.ssl.keyStore.location=../config/zookeeper/zk-keystore.p12
zookeeper.ssl.keyStore.password=password
zookeeper.ssl.keyStore.type=PKCS12
zookeeper.ssl.trustStore.location=../config/zookeeper/zk-truststore.p12
zookeeper.ssl.trustStore.password=password
# disables hostname verification on the client side when connecting to a ZooKeeper server over TLS/SSL
zookeeper.ssl.hostnameVerification=false

zoo-client.cfg was added in version 17.1.2. By default it is located next to zoo.cfg, at $GS_HOME/config/zookeeper/zoo-client.cfg.

The default ZooKeeper client configuration file path can be changed by setting the ZOOKEEPER_CLIENT_CONFIG_FILE environment variable, or by using the system property com.gs.zookeeper.client.config-file.

Backwards Compatibility

The Manager is offered side-by-side with the existing stack (GSM, LUS, etc.). We think this is a better way of working with GigaSpaces, and we want new users and customers to work solely with it. At the same time, we understand that it requires some effort from existing users who upgrade to 12.1 (probably not too much, mostly changing the scripts they use to start the environment). If you're upgrading for bug fixes or other features and don't want the Manager for now, you can switch from 12.0 to 12.1 and continue using the old components; they are all still there.

The Manager uses a different strategy when selecting the resource on which to deploy a Processing Unit instance. The strategy is to choose the container with the least relative weight, calculated by weighing each container relative to the other containers. Prior to 12.1, the strategy was to calculate the weight of a container by gathering remote state. In large deployments, the resulting network overhead and overall deployment time are costly. We can achieve almost the same behavior with the new strategy.

You may experience a different instance distribution than before. Although both strategies take a "best-effort" approach, the distribution may still be uneven in some cases because selections run simultaneously.

To switch between selector strategies, use the org.jini.rio.monitor.serviceResourceSelector system property. For example, to use the strategy that was in effect prior to 12.1, set the following when loading the Manager (in the GS_MANAGER_OPTIONS environment variable):

-Dorg.jini.rio.monitor.serviceResourceSelector=org.jini.rio.monitor.WeightedSelector

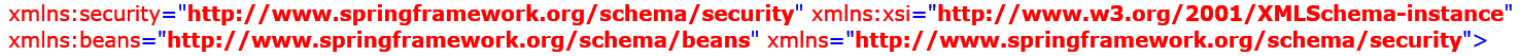

Regarding the Spring Profile:

Two profiles have been added to the Ops Manager UI: gs-ops-manager-secured and gs-ops-manager (default). These profiles are defined in the war file $GS_HOME/lib/platform/manager/webapps/V2.war. Inside the war file, the profiles are located in WEB-INF/spring/spring-security.xml, as follows:

If you are using a system property to set the active Spring profile, please take these profiles into consideration.

FAQ

Q. Why do I need 3 Managers? In previous versions 2 LUS + 2 GSM was enough for high availability.

With an even number of managers, consistency cannot be assured in case of a network partition, hence the need for 3 Managers.

Q. I want higher availability - can I use 5 Managers instead of 3?

Theoretically this is possible (Apache ZooKeeper supports this), but it is currently not supported in GigaSpaces: starting 5 Managers would also start 5 Lookup Services, which would lead to excessive chattiness and a drop in performance. This issue is in our backlog, though. If it's important for you, please contact support or your sales rep to vote it up.

Q. Can I use a load balancer in front of the REST API?

Yes. However, make sure to use sticky sessions, as some of the operations (e.g. upload/deploy) take time to propagate to the other Managers.

In-Memory Data Grid - achieve unparalleled speed, persistence, and accuracy.
