The Lookup Service

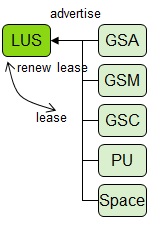

The Lookup Service (LUS![]() Lookup Service.

This service provides a mechanism for services to discover each other. Each service can query the lookup service for other services, and register itself in the lookup service so other services may find it.) provides a leased based registry holding Service Grid

Lookup Service.

This service provides a mechanism for services to discover each other. Each service can query the lookup service for other services, and register itself in the lookup service so other services may find it.) provides a leased based registry holding Service Grid![]() A built-in orchestration tool which contains a set of Grid Service Containers (GSCs) managed by a Grid Service Manager. The containers host various deployments of Processing Units and data grids. Each container can be run on a separate physical machine. This orchestration is available for XAP only. level services advertised on it. Some of the services exposed on the LUS are GigaSpaces Agent, GigaSpaces Manager, GigaSpaces Container, Space

A built-in orchestration tool which contains a set of Grid Service Containers (GSCs) managed by a Grid Service Manager. The containers host various deployments of Processing Units and data grids. Each container can be run on a separate physical machine. This orchestration is available for XAP only. level services advertised on it. Some of the services exposed on the LUS are GigaSpaces Agent, GigaSpaces Manager, GigaSpaces Container, Space![]() Where GigaSpaces data is stored. It is the logical cache that holds data objects in memory and might also hold them in layered in tiering. Data is hosted from multiple SoRs, consolidated as a unified data model. Instances (actual instances of a Space that form a topology), and Processing Unit

Where GigaSpaces data is stored. It is the logical cache that holds data objects in memory and might also hold them in layered in tiering. Data is hosted from multiple SoRs, consolidated as a unified data model. Instances (actual instances of a Space that form a topology), and Processing Unit![]() This is the unit of packaging and deployment in the GigaSpaces Data Grid, and is essentially the main GigaSpaces service. The Processing Unit (PU) itself is typically deployed onto the Service Grid. When a Processing Unit is deployed, a Processing Unit instance is the actual runtime entity. Instances (actual instances of a deployed Processing Unit).

This is the unit of packaging and deployment in the GigaSpaces Data Grid, and is essentially the main GigaSpaces service. The Processing Unit (PU) itself is typically deployed onto the Service Grid. When a Processing Unit is deployed, a Processing Unit instance is the actual runtime entity. Instances (actual instances of a deployed Processing Unit).

A Lookup Service creates a virtualized isolated environment by utilizing lookup groups (when using multicast) or lookup locators (when using unicast). When starting the LUS and other runtime components GigaSpaces Agent, GigaSpaces Manager and GigaSpaces Container, the lookup groups / lookup locators can be set in order to form an isolated environment.

In a multicast enabled environment, the lookup groups can be set using either the LOOKUPGROUPS environment variable (when using scripts), or by setting -Dcom.gs.jini_lus.groups system property.

In a unicast environment (where multicast is disabled), the lookup locators can be set using either LOOKUPLOCATORS environment variable (when using scripts), or by setting -Dcom.gs.jini_lus.locators system property. In a unicast environment, the LUS are started on specific machines (usually two LUS instances), and the lookup locators simply point to the two hosts the LUS instances are running on.

When a Container is started with a specific lookup groups / lookup locators, any Processing Unit instance running within it (and Space instances as well) will inherit the configuration and join the virtualized LUS environment.

Registering and Using a Service

The following diagram shows how a service provider registers a service with the Lookup Service, and how a client subsequently locates the service at the Lookup Service and begins working with the service.

The service proxy is copied by the provider to the Lookup Service at registration. If the client decides to use the service, he downloads the service proxy and invokes the service by calling the methods of its proxy interface.

Lease-based Registration

|

Every Service Grid component is registered with the Lookup Service (GSC |

|

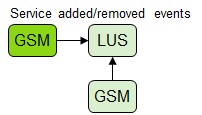

GSM Registration

|

GSMs advertise and discover each other via Lookup Service. When GSM starts, it recovers from all peer GSMs. GSMs are active-active, managing deployments or monitoring other deployments. If managing GSM of a specific processing unit has terminated, one of the monitoring GSMs becomes the new "manager' for this specific processing unit. |

|

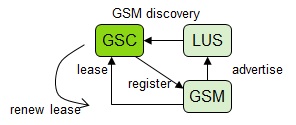

GSC Registration

|

All GSCs advertise themselves on the Lookup Service. Once these are registered they are discovered by the existing running GSMs. A lease is granted upon registration, and renewals are maintained between the GSC and the GSM. A GSC that has failed to renew it's lease will attempt to re-register with the GSM. A GSM that detects that a GSC has failed to renew, will remove the GSC from its list of available resources. Processing Unit instances are not affected by this. |

|

Space Instance Registration

|

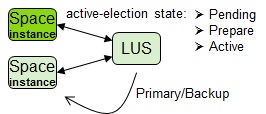

Space instances register themselves with the lookup service. Space instances go through an active-election process, discovering current instances and electing a primary Active-election is 3-phase procedure: pending, prepare, active |

|

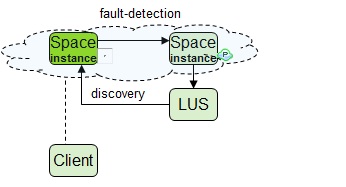

Space Instance Failure Detection

|

A backup Space instance maintains a fault-detection mechanism to its primary counterpart. If failure of a primary occurs, backup goes through an election process to become primary. Clients discover Space instances via lookup service. Client Cluster Proxy monitors the liveness of each cluster member |

|

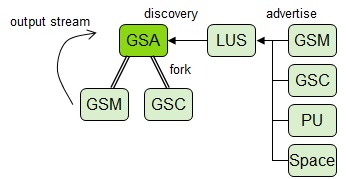

GSA - Process Monitoring

|

GSA |

|

In-Memory Data Grid - achieve unparalleled speed, persistence, and accuracy.

In-Memory Data Grid - achieve unparalleled speed, persistence, and accuracy.