Deploying onto the Grid

Deploying your processing unit to the service grid is the preferred way to run in your production environment. The service grid provides the following main benefits to every processing unit deployed onto it:

- Automatic distribution and provisioning of the processing unit instances: When deploying to the service grid, the GigaSpaces Manager identifies the relevant GigaSpaces Containers and takes care of distributing the processing unit binaries to them. You do not need to manually install the processing unit anywhere on the cluster - only into the service grid.

- SLA enforcement: The GigaSpaces Manager is also responsible for enforcing your processing unit's Service Level Agreement, or SLA. At deployment time, it creates the specified number of processing unit instances (based on the SLA) and provisions them to the running containers while enforcing all the deployment requirements, such as memory and CPU utilization, or specific deployment zones. At runtime, it monitors the processing unit instances, and if any of them fails to fulfill the SLA or becomes unavailable, it re-instantiates the processing unit automatically on another container.

You can use the GigaSpaces Universal Deployer to deploy complex multi processing unit applications.

The Deployment Process

Once built according to the processing unit directory structure, the processing unit can be deployed via the various deployment tools available in GigaSpaces (UI, CLI, Ant, Maven or the Admin API).

After you package the processing unit and deploy it via one of the deployment tools, the deployment tool uploads it to all the running GSMs, where it is extracted and provisioned to the GSCs.

The recommended way to deploy the processing unit is by packaging it into a .jar or a .zip archive and specifying the location of the packaged file to the deployment tool in use.

However, GigaSpaces also supports the deployment of exploded processing units (the deployment tool automatically packages the processing unit directories into a jar file). Another option is to place the exploded processing unit under the deploy directory of each of the GSMs and issue a deploy command with the processing unit name (the name of the directory under the deploy directory).

Distribution of Processing Unit Binaries to the Running GSCs

By default, when a processing unit instance is provisioned to run on a certain GSC, the GSC downloads the processing unit archive from the GSM into the $GS_HOME/work/processing-units directory (the location of this directory can be overridden via the com.gs.work system property).

Downloading the processing unit archive to the GSC is the recommended option, but it can be disabled. To disable it, set the pu.download deployment property to false. The GSC then does not download the entire archive; instead, it loads the processing unit classes one at a time from the GSM via a URLClassLoader.

Processing Unit Deployment using various Deployment Tools

GigaSpaces provides several options to deploy a processing unit onto the Service Grid. Below you can find a simple deployment example with the various deployment tools for deploying a processing unit archive called myPU.jar located in the /opt/gigaspaces directory:

Deploying via code is done using the GigaSpaces Admin API. The following example shows how to deploy the myPU.jar processing unit using one of the available GSMs. For more details, please consult the Admin API.

Admin admin = new AdminFactory().addGroup("myGroup").create();

File puArchive = new File("/opt/gigaspaces/myPU.jar");

ProcessingUnit pu = admin.getGridServiceManagers().waitForAtLeastOne().deploy(

new ProcessingUnitDeployment(puArchive));

Example:

echo "Deploying onprem-space ..."

curl -X POST --header 'Content-Type: application/json' --header 'Accept: text/plain' -d '{"resource": "https://wan-gateway.s3.us-east-2.amazonaws.com/gcp/onprem-space-1.0-SNAPSHOT.jar","name": "onprem-space"}' 'http://34.172.74.128:8090/v2/spaces?name=onprem-space'

Deploying via the CLI is based on the deploy command. This command accepts various parameters to control the deployment process. These parameters are documented in full in the deploy CLI reference documentation.

> $GS_HOME/bin/gs.sh(bat) deploy myPU.jar

-

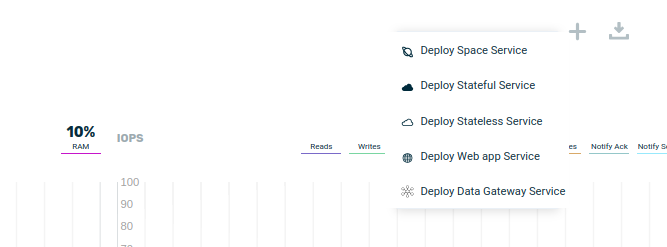

Open the GigaSpaces Ops Manager

-

Click + to display the Deploy menu

Hot Deploy

To upgrade a system without any downtime, follow this simple procedure to hot deploy a PU that includes both business logic and a collocated embedded space:

- Upload the new or modified PU classes (i.e. the polling container SpaceDataEvent implementation or relevant listener class, and any other dependency classes) to the PU deploy folder on all the GSM machines.

- Restart the PU instance running the backup space. This will force the backup PU instance to reload a new version of the business logic classes from the GSM.

- Wait for the backup PU to fully recover its data from the primary.

- Restart the primary PU instance. The existing backup instance becomes the primary. The previous primary comes back as a backup, loads the new business logic classes, and recovers its data from the new primary.

- Optional - You can restart the existing primary to force it to switch into a backup instance again. The new primary will also use the new version of the business logic classes.

You can script the above procedure via the Administration and Monitoring API, allowing you to perform system upgrade without downtime.
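The role swap in the procedure above can be followed in a small stand-alone simulation. This is only a toy model under stated assumptions, not GigaSpaces code: `HotDeploySketch`, `Instance`, `Mode` and the integer code version are hypothetical names introduced for illustration, and a restarted instance is assumed to always come back as a backup.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of one partition (primary + backup) during a hot deploy. Not GigaSpaces API. */
final class HotDeploySketch {

    enum Mode { PRIMARY, BACKUP }

    /** Hypothetical stand-in for a PU instance: its space mode and loaded code version. */
    static final class Instance {
        final String name;
        Mode mode;
        int codeVersion;

        Instance(String name, Mode mode, int codeVersion) {
            this.name = name;
            this.mode = mode;
            this.codeVersion = codeVersion;
        }
    }

    /** Restarts one instance: it reloads the code and comes back as a backup. */
    static void restart(Instance target, Instance peer, int newVersion) {
        if (target.mode == Mode.PRIMARY) {
            peer.mode = Mode.PRIMARY;    // the surviving backup is elected primary
        }
        target.mode = Mode.BACKUP;       // the restarted instance recovers from its peer
        target.codeVersion = newVersion; // and loads the classes now on the GSM
    }

    /** Restarts the backup, then the primary, and returns the state after each step. */
    static List<String> upgrade(Instance a, Instance b, int newVersion) {
        List<String> log = new ArrayList<>();
        Instance backup = a.mode == Mode.BACKUP ? a : b;
        Instance primary = backup == a ? b : a;
        restart(backup, primary, newVersion);
        log.add(describe(a, b));
        restart(primary, backup, newVersion);
        log.add(describe(a, b));
        return log;
    }

    static String describe(Instance a, Instance b) {
        return a.name + "=" + a.mode + "/v" + a.codeVersion + " "
             + b.name + "=" + b.mode + "/v" + b.codeVersion;
    }

    public static void main(String[] args) {
        Instance a = new Instance("A", Mode.PRIMARY, 1);
        Instance b = new Instance("B", Mode.BACKUP, 1);
        upgrade(a, b, 2).forEach(System.out::println);
    }
}
```

In this model one instance is in primary mode after every step, and both instances end up on the new code version with their roles swapped, which is why the optional final restart exists.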

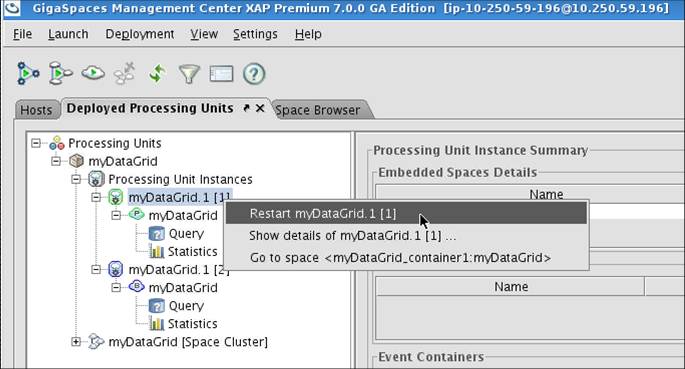

Restarting a Running PU via the GigaSpaces Management Center

The GigaSpaces Management Center has been deprecated and will be removed in a future release.

To restart a running PU (all instances) via the GigaSpaces Management Center:

- Start GigaSpaces Management Center.

- Navigate to the Deployed Processing Unit tab.

- Right-click the PU instance you want to restart

-

Select the restart menu option.

-

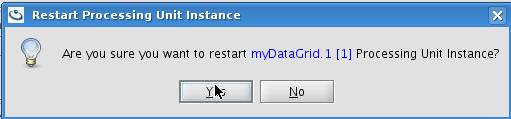

Confirm the operation.

-

Within few seconds the restart operation will be completed. If the amount of data to recover is large (few millions of objects), this might take few minutes.

-

Repeat steps 2-4 for all backup instances.

-

Repeat steps 2-4 for all primary instances. This will switch the relevant backup to be a primary mode where the existing primary will switch into a backup mode.

Hot deploy can change only the business logic; the Space classes cannot be changed this way.

Restarting a Running PU via the Admin API

The ProcessingUnitInstance includes a few restart methods that you can use to restart a PU instance:

restart()

restartAndWait()

restartAndWait(long timeout, TimeUnit timeUnit)

The following example uses ProcessingUnitInstance.restartAndWait() to restart all instances of a PU automatically, backups first and then primaries:

import java.util.concurrent.TimeUnit;

import java.util.logging.Logger;

import org.openspaces.admin.Admin;

import org.openspaces.admin.AdminFactory;

import org.openspaces.admin.pu.ProcessingUnit;

import org.openspaces.admin.pu.ProcessingUnitInstance;

import com.gigaspaces.cluster.activeelection.SpaceMode;

public class PURestartMain {

static Logger logger = Logger.getLogger("PURestart");

public static void main(String[] args) {

String puToRestart = "myPU";

Admin admin = new AdminFactory().createAdmin();

ProcessingUnit processingUnit = admin.getProcessingUnits().waitFor(

puToRestart, 10, TimeUnit.SECONDS);

if (processingUnit == null)

{

logger.info("can't get PU instances for "+puToRestart );

admin.close();

System.exit(0);

}

// Wait for all the members to be discovered

processingUnit.waitFor(processingUnit.getTotalNumberOfInstances());

ProcessingUnitInstance[] puInstances = processingUnit.getInstances();

// restart all backups

for (int i = 0; i < puInstances.length; i++) {

if (puInstances[i].getSpaceInstance().getMode() == SpaceMode.BACKUP) {

restartPUInstance(puInstances[i]);

}

}

// restart all primaries

for (int i = 0; i < puInstances.length; i++) {

if (puInstances[i].getSpaceInstance().getMode() == SpaceMode.PRIMARY) {

restartPUInstance(puInstances[i]);

}

}

admin.close();

System.exit(0);

}

private static void restartPUInstance(

ProcessingUnitInstance pi) {

final String instStr = pi.getSpaceInstance().getMode() != SpaceMode.PRIMARY?"backup" : "primary";

logger.info("restarting instance " + pi.getInstanceId()

+ " on " + pi.getMachine().getHostName() + "["

+ pi.getMachine().getHostAddress() + "] GSC PID:"

+ pi.getVirtualMachine().getDetails().getPid() + " mode:"

+ instStr + "...");

pi = pi.restartAndWait();

logger.info("done");

}

}

The GigaSpaces Hot Deploy tool allows business logic running as a PU to be refreshed (rolling PU upgrade) without any system downtime or data loss. The tool uses the hot deploy approach: it places the new PU code in the PU deploy folder on the GSM and then restarts each PU instance.

Application Deployment and Processing Unit Dependencies

An application is a logical abstraction that groups one or more Processing Units. An application allows:

- Parallel deployment of application processing units, while respecting dependency order.

- Undeployment of application processing units in reverse dependency order.
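The ordering behind these two points can be sketched in plain Java. This is a minimal illustration, not the Admin API: `DependencyOrderSketch` and its methods are hypothetical, the map simply records which processing unit names each unit depends on, and the sketch computes a sequential order rather than deploying independent units in parallel.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Toy dependency ordering for an application's processing units. Not GigaSpaces API. */
final class DependencyOrderSketch {

    /** Returns PU names so that every PU appears after the PUs it depends on. */
    static List<String> deployOrder(Map<String, List<String>> dependsOn) {
        Set<String> ordered = new LinkedHashSet<>();
        Set<String> visiting = new LinkedHashSet<>();
        for (String pu : dependsOn.keySet()) {
            visit(pu, dependsOn, visiting, ordered);
        }
        return new ArrayList<>(ordered);
    }

    /** Depth-first walk: dependencies are added to the order before the PU itself. */
    private static void visit(String pu, Map<String, List<String>> dependsOn,
                              Set<String> visiting, Set<String> ordered) {
        if (ordered.contains(pu)) {
            return;
        }
        if (!visiting.add(pu)) {
            throw new IllegalArgumentException("Dependency cycle at " + pu);
        }
        List<String> deps = dependsOn.getOrDefault(pu, Collections.<String>emptyList());
        for (String dep : deps) {
            visit(dep, dependsOn, visiting, ordered);
        }
        visiting.remove(pu);
        ordered.add(pu);
    }

    /** Undeployment walks the deployment order backwards. */
    static List<String> undeployOrder(Map<String, List<String>> dependsOn) {
        List<String> order = deployOrder(dependsOn);
        Collections.reverse(order);
        return order;
    }

    public static void main(String[] args) {
        Map<String, List<String>> dataApp = new LinkedHashMap<>();
        dataApp.put("feeder", Collections.singletonList("space")); // feeder depends on space
        dataApp.put("space", Collections.<String>emptyList());
        System.out.println("deploy:   " + deployOrder(dataApp));   // [space, feeder]
        System.out.println("undeploy: " + undeployOrder(dataApp)); // [feeder, space]
    }
}
```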

Deployment Dependencies

The sample code below deploys an application named "data-app" which consists of a space and a feeder processing unit. The feeder processing unit instances are deployed only after the space deployment is complete (each partition has both a primary and a backup space instance).

Admin admin = new AdminFactory().addGroup("myGroup").create();

File feederArchive = new File("/opt/gigaspaces/myfeeder.jar");

Application dataApp = admin.getGridServiceManagers().deploy(

new ApplicationDeployment("data-app")

.addProcessingUnitDeployment(

new ProcessingUnitDeployment(feederArchive)

.name("feeder")

.addDependency("space"))

.addProcessingUnitDeployment(

new SpaceDeployment("space"))

);

for (ProcessingUnit pu : dataApp.getProcessingUnits()) {

pu.waitFor(pu.getTotalNumberOfInstances());

}

The processing unit dependencies can be described using an XML file.

Admin admin = new AdminFactory().addGroup("myGroup").create();

//The dist zip file includes feeder.jar and application.xml file

File application = new File("/opt/gigaspaces/examples/data/dist.zip");

//Application folders are supported as well

//File application = new File("/opt/gigaspaces/examples/data/dist");

Application dataApp = admin.getGridServiceManagers().deploy(

new ApplicationFileDeployment(application)

.create()

);

for (ProcessingUnit pu : dataApp.getProcessingUnits()) {

pu.waitFor(pu.getTotalNumberOfInstances());

}

Here is the content of the application.xml file (that resides alongside feeder.jar in dist.zip):

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:context="http://www.springframework.org/schema/context"

xmlns:os-admin="http://www.openspaces.org/schema/admin"

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context.xsd

http://www.openspaces.org/schema/admin http://www.openspaces.org/schema/16.1/admin/openspaces-admin.xsd">

<context:annotation-config />

<os-admin:application name="data-app">

<os-admin:space name="space" />

<os-admin:pu processing-unit="feeder.jar">

<os-admin:depends-on name="space"/>

</os-admin:pu>

</os-admin:application>

</beans>

The processing unit dependencies can be described using an XML file.

> $GS_HOME/bin/gs.sh(bat) deploy-application examples/data/dist.zip


The reason for imposing this dependency is that the space proxy bean in the feeder processing unit would fail to initialize if the space is not available. However, this restriction could be too severe since the feeder is a singleton processing unit. For example, if a container with the feeder and a space instance fails, the space is still available (the backup is elected to primary). However, the feeder is not redeployed until the space has all instances running, which will not happen unless a container is (re)started.

Adaptive SLA

The feeder can relax this restriction, by specifying a dependency of at least one instance per partition. Now the feeder is redeployed as long as the space has a minimum of one instance per partition. The downside of this approach is that during initial deployment there is a small time gap in which the feeder writes data to the space while there is only one copy of the data (one instance per partition).

Admin admin = new AdminFactory().addGroup("myGroup").create();

File feederArchive = new File("/opt/gigaspaces/myfeeder.jar");

// The ProcessingUnitDeploymentDependenciesConfigurer supports dependencies on a minimum number of instances,

// on a minimum number of instances per partition, or waiting for a deployment of another processing unit to complete.

Application dataApp = admin.getGridServiceManagers().deploy(

new ApplicationDeployment("data-app")

.addProcessingUnitDeployment(

new ProcessingUnitDeployment(feederArchive)

.name("feeder")

.addDependencies(new ProcessingUnitDeploymentDependenciesConfigurer()

.dependsOnMinimumNumberOfDeployedInstancesPerPartition("space",1)

.create()))

.addProcessingUnitDeployment(

new SpaceDeployment("space"))

);

for (ProcessingUnit pu : dataApp.getProcessingUnits()) {

pu.waitFor(pu.getTotalNumberOfInstances());

}
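The difference between waiting for a complete space deployment and waiting for at least one instance per partition can be illustrated with a small stand-alone check. This is a sketch under assumptions, not GigaSpaces code: `DependencyPolicySketch` and its methods are hypothetical, and the array holds the number of running space instances in each partition.

```java
/** Toy comparison of the strict and relaxed dependency policies. Not GigaSpaces API. */
final class DependencyPolicySketch {

    /** True when every partition runs at least the required number of instances. */
    static boolean everyPartitionHasAtLeast(int[] runningPerPartition, int required) {
        for (int running : runningPerPartition) {
            if (running < required) {
                return false;
            }
        }
        return true;
    }

    /** Strict policy: the space deployment is complete (all planned instances are running). */
    static boolean strictDependencyMet(int[] runningPerPartition, int plannedPerPartition) {
        return everyPartitionHasAtLeast(runningPerPartition, plannedPerPartition);
    }

    /** Relaxed policy: a minimum of one instance per partition. */
    static boolean relaxedDependencyMet(int[] runningPerPartition) {
        return everyPartitionHasAtLeast(runningPerPartition, 1);
    }

    public static void main(String[] args) {
        // Two partitions planned as primary + backup; partition 0 has lost one instance.
        int[] running = {1, 2};
        System.out.println("strict:  " + strictDependencyMet(running, 2)); // false
        System.out.println("relaxed: " + relaxedDependencyMet(running));   // true
    }
}
```

With one instance missing, the strict policy keeps the feeder waiting, while the relaxed policy lets it be redeployed as long as each partition still has a running instance.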

The processing unit dependencies can be described using an XML file.

Admin admin = new AdminFactory().addGroup("myGroup").create();

//The dist zip file includes feeder.jar and application.xml file

File application = new File("/opt/gigaspaces/examples/data/dist.zip");

//Application folders are supported as well

//File application = new File("/opt/gigaspaces/examples/data/dist");

Application dataApp = admin.getGridServiceManagers().deploy(

new ApplicationFileDeployment(application)

.create()

);

for (ProcessingUnit pu : dataApp.getProcessingUnits()) {

pu.waitFor(pu.getTotalNumberOfInstances());

}

Here is the content of the application.xml file (that resides alongside feeder.jar in dist.zip):

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:context="http://www.springframework.org/schema/context"

xmlns:os-admin="http://www.openspaces.org/schema/admin"

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context.xsd

http://www.openspaces.org/schema/admin http://www.openspaces.org/schema/16.1/admin/openspaces-admin.xsd">

<context:annotation-config />

<os-admin:application name="data-app">

<os-admin:space name="space" />

<os-admin:pu processing-unit="feeder.jar">

<os-admin:depends-on name="space" min-instances-per-partition="1"/>

</os-admin:pu>

</os-admin:application>

</beans>

The processing unit dependencies can be described using an XML file.

gigaspaces/bin/gs.sh deploy-application gigaspaces/examples/data/dist.zip

Here is the content of the application.xml file (that resides alongside feeder.jar in dist.zip):

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:context="http://www.springframework.org/schema/context"

xmlns:os-admin="http://www.openspaces.org/schema/admin"

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-5.1.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-5.1.xsd

http://www.openspaces.org/schema/admin http://www.openspaces.org/schema/16.1/admin/openspaces-admin.xsd">

<context:annotation-config />

<os-admin:application name="data-app">

<os-admin:space name="space" />

<os-admin:pu processing-unit="feeder.jar">

<os-admin:depends-on name="space" min-instances-per-partition="1"/>

</os-admin:pu>

</os-admin:application>

</beans>

Undeployment Order

When it is time to undeploy dataApp, the feeder should be undeployed first, and only then the space. This reduces the number of false warnings from the feeder complaining that the space is not available. The command dataApp.undeployAndWait(3, TimeUnit.MINUTES) undeploys the application in reverse dependency order and waits at most 3 minutes for all processing unit instances to shut down gracefully.

In the example above, the feeder instance completes the teardown of all its Spring beans, and only then is the space undeployed.

Monitoring Deployment Progress

The deployment progress can be monitored using the events provided by the Admin API. There are 4 provision events on a processing unit instance:

- ProvisionStatus#ATTEMPT - an attempt to provision an instance on an available GridServiceContainer

- ProvisionStatus#SUCCESS - a successful provisioning attempt on a GridServiceContainer

- ProvisionStatus#FAILURE - a failed attempt to provision an instance on an available GridServiceContainer

- ProvisionStatus#PENDING - provisioning of an instance is pending until a matching GridServiceContainer is discovered

Admin admin = new AdminFactory().create();

admin.getProcessingUnits().getProcessingUnitInstanceProvisionStatusChanged().add( listener );

//or

admin.getProcessingUnits().getProcessingUnit("xyz").getProcessingUnitInstanceProvisionStatusChanged().add( listener );

//or

admin.addEventListener(new ProcessingUnitInstanceProvisionStatusChangedEventListener() {

@Override

public void processingUnitInstanceProvisionStatusChanged(

ProcessingUnitInstanceProvisionStatusChangedEvent event) {

ProvisionStatus newStatus = event.getNewStatus();

...

}

});

A compound listener (one that implements several listener interfaces) can be registered using Admin.addEventListener(...).
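The idea of a compound listener can be shown with a stand-alone sketch. Everything here is hypothetical and only mimics the pattern: `Registry`, `ProvisionListener`, `AliveListener` and `Recorder` are illustrative names, and the real Admin API defines its own listener interfaces and event types.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy registry showing one listener object serving several interfaces. Not GigaSpaces API. */
final class CompoundListenerSketch {

    interface ProvisionListener { void provisionStatusChanged(String status); }

    interface AliveListener { void aliveStatusChanged(String status); }

    /** Hypothetical registry: subscribes one object to every interface it implements. */
    static final class Registry {
        private final List<ProvisionListener> provision = new ArrayList<>();
        private final List<AliveListener> alive = new ArrayList<>();

        void addEventListener(Object listener) {
            if (listener instanceof ProvisionListener) {
                provision.add((ProvisionListener) listener);
            }
            if (listener instanceof AliveListener) {
                alive.add((AliveListener) listener);
            }
        }

        void fireProvision(String status) {
            provision.forEach(l -> l.provisionStatusChanged(status));
        }

        void fireAlive(String status) {
            alive.forEach(l -> l.aliveStatusChanged(status));
        }
    }

    /** Compound listener: implements both interfaces and records what it receives. */
    static final class Recorder implements ProvisionListener, AliveListener {
        final List<String> seen = new ArrayList<>();

        @Override
        public void provisionStatusChanged(String status) {
            seen.add("provision:" + status);
        }

        @Override
        public void aliveStatusChanged(String status) {
            seen.add("alive:" + status);
        }
    }

    public static void main(String[] args) {
        Registry registry = new Registry();
        Recorder recorder = new Recorder();
        registry.addEventListener(recorder); // one registration, two subscriptions
        registry.fireProvision("SUCCESS");
        registry.fireAlive("SUSPECTING");
        System.out.println(recorder.seen);
    }
}
```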

Monitoring Processing Unit Instance Fault Detection

Using the member-alive-indicator (see Monitoring the Liveness of Processing Unit Instances), the Grid Service Manager (GSM) actively monitors each processing unit instance. When an "is alive" check fails, the GSM suspects that the processing unit instance is no longer alive and retries contacting it (using the retries and timeouts configured in pu.xml under os-sla:member-alive-indicator). When all retries fail, the GSM reports that it detected a failure and tries to redeploy the instance on an available Grid Service Container (GSC).

These member-alive-indicator transitions are reflected in the Admin API by MemberAliveIndicatorStatus. Use the API to register for status-changed events and gain better visibility into GSM decisions based on the fault-detection mechanism. An alert is also fired upon a fault-detection trigger, and is visible in the Web User Interface.

The MemberAliveIndicatorStatus has three states: ALIVE, SUSPECTING and FAILURE. The transition from SUSPECTING to FAILURE is final. From this state the processing unit instance is considered not alive. The transition from SUSPECTING back to ALIVE can occur if one of the retries succeeded in contacting the processing unit instance.

Admin admin = new AdminFactory().create();

admin.getProcessingUnits().getProcessingUnitInstanceMemberAliveIndicatorStatusChanged().add( listener );

//or

admin.getProcessingUnits().getProcessingUnit("xyz").getProcessingUnitInstanceMemberAliveIndicatorStatusChanged().add( listener );

//or

admin.addEventListener(new ProcessingUnitInstanceMemberAliveIndicatorStatusChangedEventListener() {

@Override

public void processingUnitInstanceMemberAliveIndicatorStatusChanged (

ProcessingUnitInstanceMemberAliveIndicatorStatusChangedEvent event) {

MemberAliveIndicatorStatus newStatus = event.getNewStatus();

...

}

});

A compound listener (one that implements several listener interfaces) can be registered using Admin.addEventListener(...).
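The ALIVE, SUSPECTING and FAILURE transitions described in this section can be modeled as a small state machine. This is a simplified sketch, not the GSM implementation: `AliveIndicatorSketch` is a hypothetical class, `maxRetries` stands in for the retry count configured under os-sla:member-alive-indicator, and timeouts are ignored.

```java
/** Toy state machine for the member-alive indicator. Not GigaSpaces API. */
final class AliveIndicatorSketch {

    enum Status { ALIVE, SUSPECTING, FAILURE }

    private final int maxRetries; // hypothetical stand-in for the configured retries
    private Status status = Status.ALIVE;
    private int failedRetries;

    AliveIndicatorSketch(int maxRetries) {
        this.maxRetries = maxRetries;
    }

    /** Feeds the result of one "is alive" check and returns the resulting status. */
    Status onCheck(boolean reachable) {
        if (status == Status.FAILURE) {
            return status;              // FAILURE is final
        }
        if (reachable) {
            status = Status.ALIVE;      // a successful check or retry clears the suspicion
            failedRetries = 0;
        } else if (status == Status.ALIVE) {
            status = Status.SUSPECTING; // first failed check: start retrying
        } else if (++failedRetries >= maxRetries) {
            status = Status.FAILURE;    // all retries failed
        }
        return status;
    }

    public static void main(String[] args) {
        AliveIndicatorSketch indicator = new AliveIndicatorSketch(2);
        boolean[] checks = {false, true, false, false, false};
        for (boolean reachable : checks) {
            System.out.println(reachable + " -> " + indicator.onCheck(reachable));
        }
    }
}
```

In this model a suspected instance returns to ALIVE if any retry succeeds, and once FAILURE is reached later checks no longer change the status.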